GPT-3 and SEO: Overview

If you’re reading this article, you’re probably already familiar with the basic idea of GPT-3 and have likely played around with it. This article focuses on the impact we foresee as GPT-3 and SEO converge.

I’m not going to spend too much time on the mechanics of how GPT-3 and ChatGPT work here, but if this is an area of interest for you, I’ll share a few links that can help you get more familiar with it:

- Beginner’s guide to the GPT-3 API with Python

- A nice overview of GPT-3 and the surrounding AI tech landscape

- OpenAI’s own documentation on the topic

- Bonus: not related to GPT-3 specifically, but a great introduction to NLP (developer-oriented)

Most people have probably used the ChatGPT interface more than the API when playing around with its capabilities. What you’ll immediately bump into in the ChatGPT interface is that it isn’t connected to the external internet beyond serving answers to your questions. Frankly, that’s probably a very good thing at this juncture. The question on people’s minds in our industry is: how is all this going to change SEO and search as a whole?

Some powerful SEO examples

We can begin to explore this by looking at the way that it provides solutions for tasks commonly related to SEO.

First, let’s ask ChatGPT what it thinks about its role in SEO:

Pretty hard to disagree with that, right?

We’ll come back to this later, but it’s a fascinating response so far.

Next, let’s show off some of the areas where GPT-3 and especially the Chat interface can help to quickly accomplish certain types of tasks for SEO.

We’re going to start by generating some keyword suggestions so we can feed them to GPT-3 and examine the results.

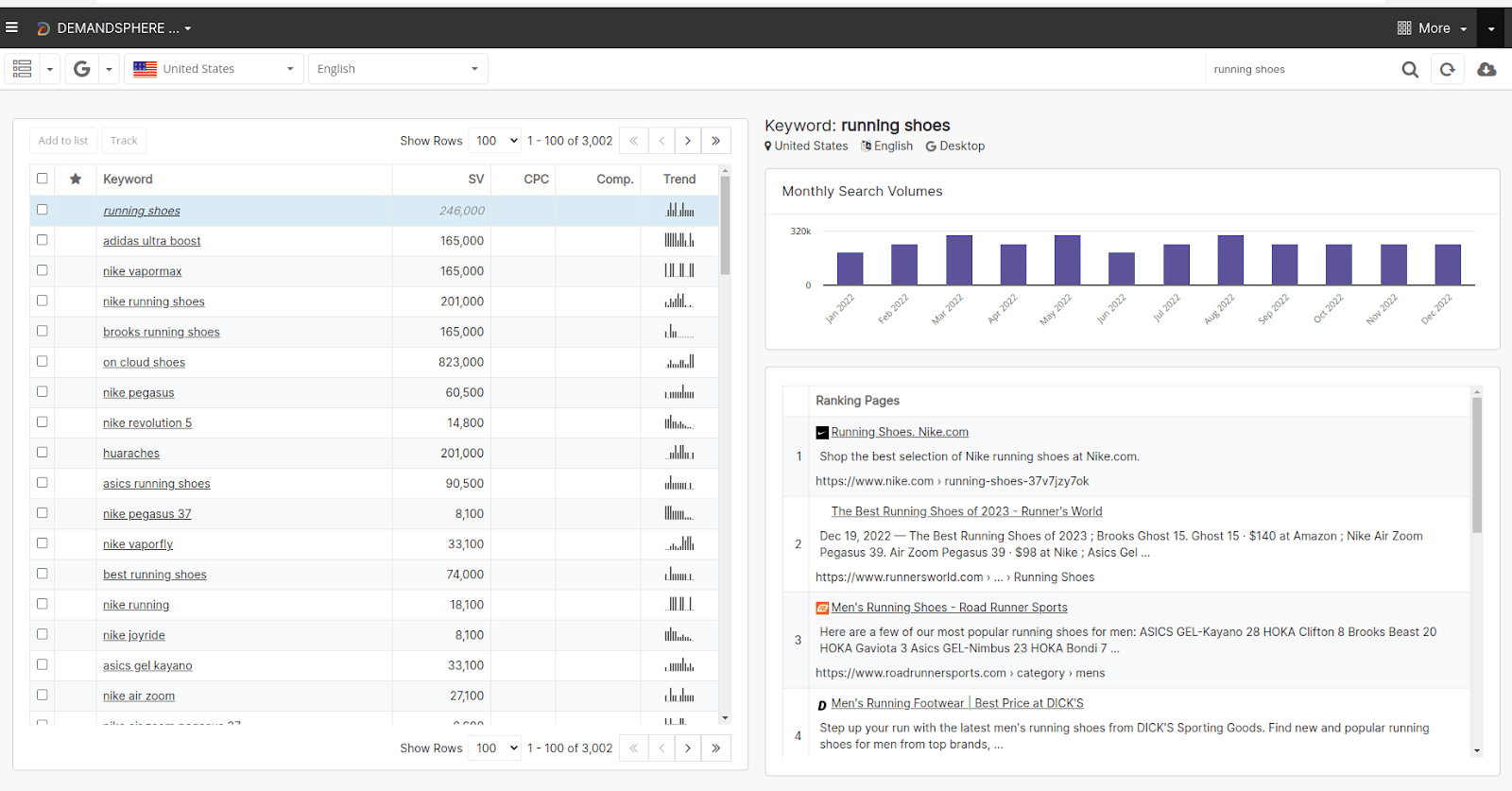

We’ll go with the topic of “running shoes”:

We get roughly 3,000 keyword suggestions from this general topic. Let’s download them and see if ChatGPT will take all of them.

Nope. Let’s try with 250 keywords.
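If you want to work around the input limit programmatically instead of pasting by hand, one option is to split the downloaded list into fixed-size batches and build one grouping prompt per batch. This is a minimal sketch, assuming a batch size of 250 (the size that worked in the chat interface above, not a documented limit); `build_grouping_prompt` and its wording are hypothetical, and sending the prompt to the API is left out.

```python
from typing import Iterator


def batch_keywords(keywords: list[str], size: int = 250) -> Iterator[list[str]]:
    """Yield consecutive slices of at most `size` keywords."""
    if size < 1:
        raise ValueError("size must be positive")
    for start in range(0, len(keywords), size):
        yield keywords[start:start + size]


def build_grouping_prompt(keywords: list[str]) -> str:
    """Hypothetical prompt template: a short instruction, then one keyword per line."""
    header = "Group the following keywords by topic. Return one group per line.\n\n"
    return header + "\n".join(keywords)
```

With a 3,000-keyword export, `batch_keywords` yields 12 batches of 250, and each batch becomes its own request.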

Ok, let’s get to work.

Keyword grouping is one of the hardest and yet most overlooked activities when planning SEO projects, simply because there are so many facets to the data of even a single keyword.

It comes down to which facet matters to the users in the business, and if you have multiple users, you’ll get as many opinions as there are users. In larger organizations, entire teams are dedicated to building knowledge taxonomies, but SEO teams typically don’t have this luxury and are forced to do a lot of work with few team members.

Can keyword grouping be made easier?

For this reason, we have some automation tools in our platform to help with this and are always adding more. Based on that experience, I was curious to see how relevant GPT-3’s groupings would be, given only minimal instructions.

And ChatGPT stopped there. This is typical: the service often stops far short of what you requested, presumably to save computing resources, which is understandable for something that is freely available at the moment.

So I prompted a little further and got this:

And it bombed out again due to server capacity. That being said, these are reasonable attempts at grouping the keywords I provided, given only the bare minimum of instructions.

Next, let’s see how it does with generating some Python code to do the same thing.

Code generation for automated keyword grouping

First, let’s trim down the size of the list to 25 keywords because GPT-3 kept timing out.

Then I told it to write a Python script that groups the list of keywords by topical relevancy:

Ok, so it did write a Python script to group these keywords, but any developer will immediately notice that it takes a very simplistic approach: a small dictionary of potential groups built from word mappings.

This approach is not bad in and of itself, because it is easy to extend and easy to explain to any stakeholder on the team.
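ChatGPT’s script isn’t reproduced here, but the general word-mapping idea looks something like the following sketch: a hand-maintained dictionary maps trigger words to group names, and each keyword lands in the first group whose trigger appears among its words. The group names and trigger words are illustrative assumptions, not ChatGPT’s actual output.

```python
# Illustrative mapping of group name -> trigger words (assumed, not ChatGPT's output).
GROUP_TRIGGERS: dict[str, list[str]] = {
    "trail": ["trail", "hiking", "off-road"],
    "women": ["women", "womens", "women's", "ladies"],
    "men": ["men", "mens", "men's"],
    "brand": ["nike", "adidas", "brooks", "asics", "hoka"],
}


def group_keywords(
    keywords: list[str], triggers: dict[str, list[str]] = GROUP_TRIGGERS
) -> dict[str, list[str]]:
    """Assign each keyword to the first group whose trigger word it contains."""
    groups: dict[str, list[str]] = {name: [] for name in triggers}
    groups["other"] = []  # catch-all for keywords no trigger matches
    for keyword in keywords:
        tokens = keyword.lower().split()
        for name, words in triggers.items():
            if any(word in tokens for word in words):
                groups[name].append(keyword)
                break  # first match wins, so dictionary order matters
        else:
            groups["other"].append(keyword)
    return groups
```

For example, `group_keywords(["nike running shoes", "trail running shoes women"])` puts the second keyword under `trail` rather than `women`, because `trail` is checked first. That ordering rule is exactly the kind of thing that is easy to explain to stakeholders and easy to change.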

But, I wanted to see if it could do something a little more dynamic:

I had to cajole it a bit to get it to write the code:

And finally, here we are:

Ok, fair enough. There is a lot missing here, and ChatGPT itself pointed out that building a working version of a good pipeline for this process would require many more dependencies and much more preparatory work. Still, it does give a rough overview of how this could work.

This is well-trod ground and there are many great examples out there for people who want to do this themselves.
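As one dependency-free illustration of a more dynamic approach (a swapped-in technique, not the pipeline ChatGPT described), keywords can be clustered greedily by word overlap, measured as Jaccard similarity. The stopword list and the 0.5 threshold are arbitrary assumptions; a production pipeline would more likely use embeddings or TF-IDF vectors with a proper clustering algorithm.

```python
# Words ignored when comparing keywords (assumed, tune for your data).
STOPWORDS = {"for", "the", "a", "and", "of", "in", "with", "best"}


def tokens(keyword: str) -> frozenset[str]:
    """Lowercased word set of a keyword, minus stopwords."""
    return frozenset(w for w in keyword.lower().split() if w not in STOPWORDS)


def jaccard(a: frozenset[str], b: frozenset[str]) -> float:
    """Size of the intersection divided by size of the union."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def cluster_keywords(keywords: list[str], threshold: float = 0.5) -> list[list[str]]:
    """Greedy 'leader' clustering: each keyword joins the first cluster whose
    leader (first member) is at least `threshold` similar, else starts a new one."""
    clusters: list[tuple[frozenset[str], list[str]]] = []
    for kw in keywords:
        kw_tokens = tokens(kw)
        for leader_tokens, members in clusters:
            if jaccard(kw_tokens, leader_tokens) >= threshold:
                members.append(kw)
                break
        else:
            clusters.append((kw_tokens, [kw]))
    return [members for _, members in clusters]
```

Because every keyword in a topic export like this one shares “running shoes,” you would probably add those words to the stopwords, or nearly everything will collapse into one cluster.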

How to make schema generation work for SEOs with GPT-3

Next, I wanted to see how well it would generate JSON-LD structured data, since we’re talking about items that you’d typically see in an e-commerce website. Being able to automate this process based on data in your catalog is one of the stronger use cases for something like GPT-3 in automating content and / or markup for SEO.

It also doesn’t, in my view, really violate the “organic content” principle that I will discuss further below.

Great. Obviously it can be expanded but it’s a nice way to prototype some quick structures.
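To drive this from catalog data instead of a chat prompt, the same kind of markup can be templated in code. This is a minimal sketch using schema.org’s `Product`, `Brand`, and `Offer` types; the catalog field names (`name`, `sku`, `brand`, `price`, `currency`, `in_stock`, `url`, `image`) are hypothetical and would need to be mapped to your own catalog columns.

```python
import json


def product_jsonld(item: dict) -> str:
    """Build a schema.org Product JSON-LD string from one catalog row.
    The input field names are hypothetical placeholders."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": item["name"],
        "sku": item["sku"],
        "image": item["image"],
        "brand": {"@type": "Brand", "name": item["brand"]},
        "offers": {
            "@type": "Offer",
            "url": item["url"],
            "price": f"{item['price']:.2f}",  # two-decimal string
            "priceCurrency": item["currency"],
            "availability": (
                "https://schema.org/InStock"
                if item["in_stock"]
                else "https://schema.org/OutOfStock"
            ),
        },
    }
    return json.dumps(data, indent=2)
```

The returned string goes inside a `<script type="application/ld+json">` tag on the product page.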

Let’s expand it out further to encompass a graph or ontology of categories and products for category listing pages in an e-commerce context.

We can run this through the JSON-LD playground and see that we get valid results.

Of course, you may want to tune this according to the structure of your site but this is a very impressive output based on such high-level requirements.
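One way to express such a category-and-product graph in code is a JSON-LD `@graph` that links a `CollectionPage` to an `ItemList` of `Product` nodes via `@id` references. This is a sketch under stated assumptions: the input fields and the `#webpage` / `#itemlist` / `#product` fragment convention for `@id` values are choices made for illustration, not requirements.

```python
import json


def category_graph(category: dict, products: list[dict]) -> dict:
    """Link a category page, its product list, and its products in one @graph.
    Input field names and the '#fragment' @id convention are assumptions."""
    page_id = category["url"] + "#webpage"
    list_id = category["url"] + "#itemlist"
    product_nodes = [
        {"@type": "Product", "@id": p["url"] + "#product", "name": p["name"], "url": p["url"]}
        for p in products
    ]
    return {
        "@context": "https://schema.org",
        "@graph": [
            {
                "@type": "CollectionPage",
                "@id": page_id,
                "url": category["url"],
                "name": category["name"],
                "mainEntity": {"@id": list_id},  # the page is "about" the list
            },
            {
                "@type": "ItemList",
                "@id": list_id,
                "numberOfItems": len(products),
                "itemListElement": [
                    {"@type": "ListItem", "position": i, "item": {"@id": node["@id"]}}
                    for i, node in enumerate(product_nodes, start=1)
                ],
            },
            *product_nodes,
        ],
    }


if __name__ == "__main__":
    demo = category_graph(
        {"name": "Trail Running Shoes", "url": "https://example.com/c/trail"},
        [{"name": "Example Shoe", "url": "https://example.com/p/example-shoe"}],
    )
    print(json.dumps(demo, indent=2))
```

As with the chat output, it’s worth pasting the result into the JSON-LD playground to confirm it parses as intended.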

Search intent mapping

Another very helpful filter when looking at keywords is the concept of search intent.

I was curious to see how much ChatGPT knew about this topic:

Solid. Let’s see what it comes up with for my list of sample keywords:

Pretty good, especially since these are all product names that do indeed tend toward commercial / transactional behavior.
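For comparison, a common non-AI baseline is a modifier-based heuristic that checks each keyword for telltale words. The modifier lists below are illustrative assumptions, not a standard taxonomy. Note that bare product names, like the ones in my sample list, fall through to `unknown`, which is where a model like GPT-3 (or a brand/product list) adds value.

```python
# Checked in order; the first intent with a matching modifier wins.
# Modifier lists are illustrative assumptions.
INTENT_MODIFIERS: dict[str, tuple[str, ...]] = {
    "transactional": ("buy", "price", "cheap", "deal", "deals", "discount", "coupon", "sale", "order"),
    "commercial": ("best", "review", "reviews", "vs", "compare", "comparison", "top"),
    "informational": ("how", "what", "why", "guide", "tips"),
    "navigational": ("login", "near me", "store", "website", "official"),
}


def classify_intent(keyword: str) -> str:
    """Return the first intent whose modifier appears as a whole word or phrase."""
    padded = f" {keyword.lower().strip()} "  # pad so multi-word modifiers match whole words
    for intent, modifiers in INTENT_MODIFIERS.items():
        if any(f" {m} " in padded for m in modifiers):
            return intent
    return "unknown"
```

For instance, `classify_intent("best trail running shoes")` returns `"commercial"`, while `classify_intent("nike pegasus 40")` returns `"unknown"`.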

Build keyword lists

Next, I wanted to see how it did at building lists of keywords. Anyone in the industry will tell you that this is one of the hardest and most time-consuming aspects of SEO. Getting lists of keywords is easy; the hard part is getting ideas for keywords that are relevant, drive traffic, and are not impossible to rank well for.

I didn’t expect GPT to meet all of those requirements, particularly because it makes very clear that it isn’t connected to any external data sources. As a result, there is no way to measure relevance (aside from the linguistic angle) or to know anything about search volumes or ranking difficulty.

Ok. Well, they are keywords.
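Since ChatGPT has no volume or difficulty data, its lists are essentially linguistic expansions. A similar raw list can be produced deterministically by crossing seed terms with modifiers, then checking the candidates in a keyword tool for volume and difficulty. This is a swapped-in technique, not what ChatGPT does internally, and the seeds and modifiers shown in the usage note are hypothetical.

```python
from itertools import product


def expand_keywords(seeds: list[str], prefixes: list[str], suffixes: list[str]) -> list[str]:
    """Combine every prefix/seed/suffix triple into a phrase.
    Include "" in prefixes or suffixes to allow 'no modifier'."""
    combos = set()  # a set removes duplicate phrases
    for pre, seed, suf in product(prefixes, seeds, suffixes):
        phrase = " ".join(part for part in (pre, seed, suf) if part)
        combos.add(phrase)
    return sorted(combos)
```

For example, `expand_keywords(["running shoes"], ["", "best"], ["", "for women"])` yields four candidates, from “best running shoes” to “running shoes for women.” The hard part the article describes (relevance, traffic, rankability) still happens afterwards.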

One nice thing is the ease with which things can be translated to other languages, in the same context.

Translate them to Japanese:

There you go. The same keywords, quickly translated to Japanese.

Do note, however, that there is a HUGE difference between translation and localization, especially when it comes to SEO.

I don’t yet see good solutions from any of these AI tools for the localization problem, at least not with a high degree of cultural relevance, but the translation part does at least get you on the path.

Extract keywords with GPT-3

Another notoriously hard problem both in SEO automation and NLP in general is keyword or term extraction.

Again, it’s easy to get words from text. The question is always how relevant the extracted words are.

These are quite solid results.
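To judge results like these, it helps to have a simple baseline to compare against. The sketch below is a plain frequency count of unigrams and bigrams after stopword removal. It is a stand-in technique, not how GPT-3 extracts terms, and the tiny stopword list is an illustrative assumption.

```python
import re
from collections import Counter

# Minimal illustrative stopword list; real pipelines use much larger ones.
STOPWORDS = frozenset(
    "a an and are as at be by for from has in is it its of on or "
    "that the to was were will with you your".split()
)


def extract_terms(text: str, top_n: int = 5) -> list[tuple[str, int]]:
    """Count unigrams and bigrams that contain no stopwords; return the most frequent."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    counts: Counter = Counter()
    for i, word in enumerate(words):
        if word in STOPWORDS:
            continue
        counts[word] += 1
        # Bigram only when the next word is not a stopword.
        if i + 1 < len(words) and words[i + 1] not in STOPWORDS:
            counts[f"{word} {words[i + 1]}"] += 1
    return counts.most_common(top_n)
```

One known weakness: bigrams can span sentence boundaries, so a more careful version would split sentences first.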

Writing a content outline with ChatGPT

Now, we’re starting to move into more controversial topics: content generation.

First, let’s start with what I believe is a still relatively benign activity, with some serious caveats, and that is generating outlines for new content.

The main caveat here is that, if you simply use a tool like this to generate an outline and hand it off to some outsourced content writer, you’re probably going to get pretty vanilla content, at best. You might even get AI-generated content back! (more on that later)

Let’s see how it does:

Pretty boring but it is an outline and might provide some basic writing prompts. You can always make more specific requests.

Writing a blog post based on an outline

Next, let’s use the generated outline as a prompt for writing an actual blog post. This is where people are going to get in trouble in the coming months and years.

It kept stopping after a few sections, clearly not wanting to spend the CPU time on creating the whole thing.

But you get the basic idea.

The big one: writing a blog post from scratch

Here’s what many would consider to be the Holy Grail of content generation (I’m not a fan, I’ll be honest), but let’s see what we get:

677 words, not too bad.

It’s an ok start for an article but you can see how the web can very quickly and easily be polluted by what is ultimately low-tier filler content designed to find its way into Google’s indexes.

For those who care about energy consumption on a global scale, one could argue that AI-generated content will drive an enormous increase in energy use simply so that search engines can tell generated content apart from created content.

Let’s look at another task:

Generating titles and meta descriptions based on keywords, emphasizing the keyword in both fields

Coming up with good titles and meta descriptions for your content is another time-consuming task. I figured ChatGPT would do a decent job here because of the shorter length of the text required:

Could be worse.

And for meta description:

Again, not bad for a robot.

At a minimum, it is a way to eliminate duplicate and empty title and meta description tags.
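Before generating replacements, you first need to know which pages have empty or duplicate tags. This is a minimal audit sketch, assuming a crawl already gives you a mapping of URL to `title` and `description`. The 60- and 155-character thresholds are common rules of thumb for display truncation, not limits published by Google.

```python
from collections import Counter

TITLE_MAX = 60          # rule-of-thumb display length, not a Google limit
DESCRIPTION_MAX = 155   # rule-of-thumb display length, not a Google limit


def audit_tags(pages: dict[str, dict[str, str]]) -> dict[str, list[str]]:
    """Return issue strings per URL for empty, duplicate (case-insensitive), or long tags."""
    issues: dict[str, list[str]] = {url: [] for url in pages}
    for field, limit in (("title", TITLE_MAX), ("description", DESCRIPTION_MAX)):
        # Count normalized non-empty values to spot duplicates across pages.
        seen = Counter(
            tags.get(field, "").strip().lower()
            for tags in pages.values()
            if tags.get(field, "").strip()
        )
        for url, tags in pages.items():
            value = tags.get(field, "").strip()
            if not value:
                issues[url].append(f"empty {field}")
                continue
            if seen[value.lower()] > 1:
                issues[url].append(f"duplicate {field}")
            if len(value) > limit:
                issues[url].append(f"{field} longer than {limit} chars")
    return issues
```

Only the URLs with issues would then be sent to a model for new suggestions, which keeps the generated portion small and reviewable.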

Of course, Google does sometimes rewrite both of these fields in the SERP already and this is likely to increase based on usage and other metrics.

That said, this could still be a pretty solid use case for at least fleshing out a bunch of titles and meta descriptions (we’ll discuss the moral / ethical / creative questions below) pretty quickly.

This would get even more interesting in e-commerce use cases, especially using the API, when merged with data from the catalog:

Much more interesting.
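The prompts behind that output aren’t shown here, but merging catalog data into the request can be sketched as a template filled from each product record. The template wording, the field names, and the sample product in the usage note are hypothetical; the API call itself is omitted.

```python
from string import Template

# Hypothetical prompt wording; $-placeholders are filled per product.
PROMPT_TEMPLATE = Template(
    "Write an SEO title (max 60 characters) and a meta description "
    "(max 155 characters) for the product below. Include the keyword "
    '"$keyword" in both.\n'
    "Product: $name\nBrand: $brand\nKey features: $features\nPrice: $price"
)


def build_meta_prompt(product: dict, keyword: str) -> str:
    """Fill the template from one catalog record (field names are assumptions)."""
    return PROMPT_TEMPLATE.substitute(
        keyword=keyword,
        name=product["name"],
        brand=product["brand"],
        features=", ".join(product["features"]),
        price=product["price"],
    )
```

For a made-up record such as `{"name": "Trail Runner X", "brand": "ExampleBrand", "features": ["lightweight", "grippy outsole"], "price": "$130"}`, the prompt carries the product facts alongside the target keyword, which is what makes the output more specific than a keyword-only request.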

It still requires some tuning, but once you get into automatically generating text, tuning is what always requires the most time and energy.

It can require so much time and energy that you have to be aware of your point of diminishing returns relative to the perceived time and cost savings that you were initially banking on.

Closed-loop thinking vs. open-loop thinking

Now is a good time to wax philosophical and start coming up with some concepts to evaluate the ethical, moral, and metaphysical questions that inevitably arise in these discussions.

Let’s start off with a real high falutin’ question and see what ChatGPT says:

We’re off to a good start on the topic.

Let’s ask it about one of the central debates in AI:

Pretty good answer.

The next two questions were a little out there but the mood was upon me so I went with it.

And, finally:

Some further clarifications and questions:

So, that turned out to be a pretty interesting discussion. The main point and takeaway is the idea that GPT and similar models are ultimately gleaning content that was created by humans.

This creates a closed loop of available thoughts and words that it can choose from.

The societal risk of a web polluted with massive amounts of AI-generated content is a great filtering of the natural thoughts that flow out of the experience of humanity.

We’re already dealing with the effects of the massively reduced literary skills and vocabularies of modern writers (on the web, at least), due in no small part to the “mercantilization” of writing that has already occurred (ironically or otherwise) because search engines prefer easily parsable articles and, in particular, “listicles” and other such tragedies.

A novelty-seeking mechanism in search algorithms?

A long-standing theory of mine is that search engines, particularly since the advent of deep learning, function in some ways like the learning mechanism of the human brain: they seek “novelty.” This novelty-seeking mechanism is what ultimately (ideally, though not always in reality) gets expressed in the results of their ranking algorithms.

If this theory is true, then this could be one of the major failing points of AI-generated content. You simply don’t get novel results.

Remixes, yes. Novelty, no.

The dangers of misinformation and potential compliance issues

I’m not going to post them here today, but I’ve seen some cases where ChatGPT very confidently provides answers that are outright, simply, and factually incorrect.

The danger of misinformation leaking into content via GPT is quite high because it sounds so confident.

This can create all sorts of hazards for online retailers, from product liability to cost and inventory issues.

In other industries, such as anything related to finance, insurance, or healthcare, using auto-generated content is probably a very bad idea no matter how you look at it.

The ethics of generated vs. created content

Even before there were any publicly available systems that could automatically generate content, the term “content generation” became quite prevalent in the digital marketing world. The term always felt dirty because, at the time anyway, we were talking about humans writing and creating content, not “generating” it.

I put it in the same general category as referring to employees as “resources.”

They are people, not resources.

As a result, I’ve always corrected this term to “content creation,” and now I find myself using it to further differentiate human-created content from machine-generated content.

If we’re talking about AI, ok, you can say “generated.” If a human wrote it, say “created.”

Where things begin to merge is when you move from a single article or other piece of content, where we have this “created” vs. “generated” dichotomy, into the production process. If you’re going to scale to the level required to compete in hot markets, you’re going to need to build a content production operation. But this is still most commonly done (as it should be, IMO) with created content, making smart use of tools along the way to optimize the workflow. That is a topic for another time.

100% organic free-range content

This also matters from a technical and a business point of view.

At this stage, it is hard to predict exactly what Google and the other search engines will do and how they will evaluate the question of content that is generated vs. created.

My personal prediction, however, is that within a few years we will see aggressive moves by Google to identify and penalize generated content in its rankings.

This assumes, of course, that search interfaces will even look the same 3-5 years from now.

There is certainly no guarantee of that, as anyone who has used ChatGPT will have immediately noticed that it offers entirely new ways of interacting with information.

At the same time, we can also expect Google to come out with new AI products of its own and it could very well be capable of annihilating both ChatGPT and GPT-3 in future iterations.

We won’t know what that will look like until we see it.

There was a very good article published in the last week or so, on this topic of truly organic content, called “Certified, 100% AI-Free Organic Content.”

It’s definitely worth the read.

The author envisions a world in which both humans and search engines want to filter for content created by humans, and proposes a meta tag or some other sort of HTML tag to distinguish AI-generated from created content.

I like the flexibility of this and I think there are even more opportunities to do something similar at the site level.

A thought experiment for self-certification of 100% organic content – organic.txt and organic.xml / .json for SEOs and site owners

During a discussion of the above-linked article on Hacker News, I mentioned that the idea of a site-wide file could be an interesting way for site-owners to self-certify that some or all of their content was truly organic.

We already have standards such as robots.txt and XML sitemaps that indicate to search engines how a site is structured and what to expect during crawls. There are also security.txt and others.

It’s easy to imagine a similar file called organic.txt, structured like a robots.txt file, with directives indicating which parts of the site, if not all of it, are completely organic. An extension to sitemap.xml could add an attribute doing the same thing and, of course, this could also be achieved through meta directives at the file level.
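No such standard exists, but purely as a thought experiment, an organic.txt could borrow robots.txt’s path-prefix rules. In this sketch, the directive names `Organic` and `Generated`, the `#` comment syntax, and the longest-prefix-wins rule (with ties going to the more conservative `Generated`) are all invented for illustration.

```python
from typing import Optional


def parse_organic_txt(text: str) -> list[tuple[str, bool]]:
    """Parse hypothetical 'Organic: <path>' / 'Generated: <path>' lines into
    (path_prefix, is_organic) rules. '#' starts a comment."""
    rules: list[tuple[str, bool]] = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if not line or ":" not in line:
            continue
        directive, path = (part.strip() for part in line.split(":", 1))
        if directive.lower() == "organic":
            rules.append((path, True))
        elif directive.lower() == "generated":
            rules.append((path, False))
    return rules


def is_organic(rules: list[tuple[str, bool]], url_path: str) -> Optional[bool]:
    """Longest matching prefix wins; None means the file makes no claim."""
    matches = [(len(prefix), flag) for prefix, flag in rules if url_path.startswith(prefix)]
    if not matches:
        return None
    # On a tie in prefix length, prefer the more conservative Generated rule.
    return max(matches, key=lambda m: (m[0], not m[1]))[1]
```

Under these assumed rules, a file containing `Organic: /` and `Generated: /product-feeds/` would declare the whole site organic except the product feeds, mirroring how a robots.txt `Disallow` can carve an exception out of a broad `Allow`.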

How likely this is to happen will ultimately depend on how much the search engines, particularly Google, profess to care. It would save them a ton of compute time and money, however, if a reliable market for these identifiers were established. Sites that violated the trust of these declarations could be harshly penalized in the rankings and the web would, frankly, probably be a better place as a result.

You can use GPT-3 as an advisor instead of a writer to get good coverage

All of that said, I still see a lot of value for automating SEO tasks in the sort of language models that GPT-3 represents, as well as in the genius of the ChatGPT interface. It’s an easy demonstration of their power.

Based on my own usage and some of the experiments above, I think that ChatGPT can easily fill the role of prototyping tool, data explorer, and advisor on some basic concepts. It’s almost more like a dynamic Wikipedia than it is a search engine, at this stage anyway.

Going forward

To round out what has become a very long blog post, I came away with a number of key takeaways.

In general, I don’t think ChatGPT as an interface is ready or even designed to handle large datasets. This can certainly change, of course, as the platform evolves and as paid plans are introduced.

The Chat interface is genius on many levels, as demonstrated above.

This will ultimately lead to the further commodification of AI and I already roll my eyes most of the time when I see other vendors and SaaS companies in general talk about their AI and ML capabilities. The technology has become so pervasive that it’s almost redundant to mention it. This trend will only continue.

Some of the task automation above demonstrates that ChatGPT can be very useful for task acceleration and ideation, but it is certainly not a great fit for the finished product in a range of professional settings.

I think there is a chance that it actually ends up having a larger impact on the Business Intelligence (BI) and Knowledge Management (KM) industries than it does search, but we will see. The possibilities for new forms of advertising are, to my chagrin, limitless.

How about GPT-3 itself for use within SEO? There are a lot of opportunities for automation and use in classification, extraction, and ideation. For content generation? I certainly wouldn’t recommend it.

In the SEO world, SEOs and executives need to be very disciplined when evaluating this technology. It looks great but it can potentially open your company up to liabilities that weren’t a big risk before. There will be a need to monitor and evaluate new content as it is being introduced to determine whether it was created or generated.